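What I really wanted to keep was the project configuration, so that none of the clients' DSN settings would have to change. The error data itself I could do without.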

There is already an article with a tutorial on migrating the configuration: https://medium.com/@avigny/sentry-on-premise-migration-dc0e42f85af4

Along the way, however, I ran into a strange error:

    root@b06d49e21a01:/# sentry import data/files/sentry_export.json
    00:27:04 [WARNING] sentry.utils.geo: settings.GEOIP_PATH_MMDB not configured.
    /usr/local/lib/python2.7/site-packages/cryptography/__init__.py:39: CryptographyDeprecationWarning: Python 2 is no longer supported by the Python core team. Support for it is now deprecated in cryptography, and will be removed in a future release.
      CryptographyDeprecationWarning,
    00:27:08 [INFO] sentry.plugins.github: apps-not-configured
    Traceback (most recent call last):
      File "/usr/local/bin/sentry", line 8, in <module>
        sys.exit(main())
      File "/usr/local/lib/python2.7/site-packages/sentry/runner/__init__.py", line 166, in main
        cli(prog_name=get_prog(), obj={}, max_content_width=100)
      File "/usr/local/lib/python2.7/site-packages/click/core.py", line 722, in __call__
        return self.main(*args, **kwargs)
      File "/usr/local/lib/python2.7/site-packages/click/core.py", line 697, in main
        rv = self.invoke(ctx)
      File "/usr/local/lib/python2.7/site-packages/click/core.py", line 1066, in invoke
        return _process_result(sub_ctx.command.invoke(sub_ctx))
      File "/usr/local/lib/python2.7/site-packages/click/core.py", line 895, in invoke
        return ctx.invoke(self.callback, **ctx.params)
      File "/usr/local/lib/python2.7/site-packages/click/core.py", line 535, in invoke
        return callback(*args, **kwargs)
      File "/usr/local/lib/python2.7/site-packages/click/decorators.py", line 17, in new_func
        return f(get_current_context(), *args, **kwargs)
      File "/usr/local/lib/python2.7/site-packages/sentry/runner/decorators.py", line 30, in inner
        return ctx.invoke(f, *args, **kwargs)
      File "/usr/local/lib/python2.7/site-packages/click/core.py", line 535, in invoke
        return callback(*args, **kwargs)
      File "/usr/local/lib/python2.7/site-packages/sentry/runner/commands/backup.py", line 15, in import_
        for obj in serializers.deserialize("json", src, stream=True, use_natural_keys=True):
      File "/usr/local/lib/python2.7/site-packages/django/core/serializers/json.py", line 88, in Deserializer
        six.reraise(DeserializationError, DeserializationError(e), sys.exc_info()[2])
      File "/usr/local/lib/python2.7/site-packages/django/core/serializers/json.py", line 81, in Deserializer
        objects = json.loads(stream_or_string)
      File "/usr/local/lib/python2.7/json/__init__.py", line 339, in loads
        return _default_decoder.decode(s)
      File "/usr/local/lib/python2.7/json/decoder.py", line 367, in decode
        raise ValueError(errmsg("Extra data", s, end, len(s)))
    django.core.serializers.base.DeserializationError: Extra data: line 1 column 2 - line 9340 column 1 (char 1 - 184186)
    Exception in thread raven-sentry.BackgroundWorker (most likely raised during interpreter shutdown)
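
The position in that message is already a clue. A JSON document cannot begin with a bare timestamp. The decoder reads the leading `0` of text like `07:14:23` as a complete number and reports everything after it as extra data, starting at char 1 (line 1, column 2). Below is a small reproduction with Python 3's `json` module. The traceback above is from Python 2.7, which words the message slightly differently. The sample text and the `decode_error` helper are made up for illustration:

```python
import json

# Made-up sample: one log line in front of an otherwise valid JSON array.
SAMPLE = (
    "07:14:23 [WARNING] sentry.utils.geo: settings.GEOIP_PATH_MMDB not configured.\n"
    '[{"model": "sentry.project", "pk": 1, "fields": {}}]\n'
)


def decode_error(text):
    """Return (msg, lineno, colno, pos) of the JSONDecodeError raised for text,
    or None if text parses as JSON."""
    try:
        json.loads(text)
    except json.JSONDecodeError as exc:
        return exc.msg, exc.lineno, exc.colno, exc.pos
    return None


print(decode_error(SAMPLE))  # ('Extra data', 1, 2, 1)
```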

On closer inspection, it turned out that the exported sentry_export.json file had other stdout output mixed into it:

    07:14:23 [WARNING] sentry.utils.geo: settings.GEOIP_PATH_MMDB not configured.
    07:14:55 [INFO] sentry.plugins.github: apps-not-configured
    >> Beginning export
    ...
    >> Skipping model <Broadcast>
    >> Skipping model <CommitAuthor>
    >> Skipping model <FileBlob>
    >> Skipping model <File>
    >> Skipping model <FileBlobIndex>
    >> Skipping model <DeletedOrganization>
    >> Skipping model <DeletedProject>
    >> Skipping model <DeletedTeam>
    ...

Only after I deleted these extra lines did the file become valid JSON.
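
Deleting the lines by hand works. For a large export, a small script can do the same job. This is a minimal sketch, not a Sentry tool. It assumes the stray lines are whole lines shaped like the ones above: either an `HH:MM:SS [LEVEL]` timestamp prefix or a `>> ` progress prefix. It also assumes the JSON itself is indented, so none of its own lines starts with a digit or `>>`. `strip_log_lines` and `clean_export` are hypothetical names. The result is re-parsed with `json.loads`, so anything the filter misses fails loudly before it reaches `sentry import`:

```python
import json
import re

# Assumed shapes of the stray lines, taken from the output above:
#   "07:14:23 [WARNING] sentry.utils.geo: ..."  (timestamped log line)
#   ">> Skipping model <Broadcast>"             (export progress line)
LOG_LINE = re.compile(r"^(?:\d{2}:\d{2}:\d{2} \[[A-Z]+\] |>> )")


def strip_log_lines(text):
    """Return text without the lines that look like log output."""
    return "".join(
        line for line in text.splitlines(keepends=True) if not LOG_LINE.match(line)
    )


def clean_export(src_path, dst_path):
    """Hypothetical helper: write a cleaned copy of an export file.

    Returns the number of serialized objects; json.loads raises if any
    non-JSON text survived the filter.
    """
    with open(src_path, encoding="utf-8") as src:
        cleaned = strip_log_lines(src.read())
    objects = json.loads(cleaned)
    with open(dst_path, "w", encoding="utf-8") as dst:
        dst.write(cleaned)
    return len(objects)
```

Calling `clean_export("sentry_export.json", "sentry_export.clean.json")` leaves the original file untouched, so a wrong guess about the log format costs nothing.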

Running the import again, however, hit an SQL error:

      File "/usr/local/lib/python2.7/site-packages/click/core.py", line 722, in __call__
        return self.main(*args, **kwargs)
      File "/usr/local/lib/python2.7/site-packages/click/core.py", line 697, in main
        rv = self.invoke(ctx)
      File "/usr/local/lib/python2.7/site-packages/click/core.py", line 1066, in invoke
        return _process_result(sub_ctx.command.invoke(sub_ctx))
      File "/usr/local/lib/python2.7/site-packages/click/core.py", line 895, in invoke
        return ctx.invoke(self.callback, **ctx.params)
      File "/usr/local/lib/python2.7/site-packages/click/core.py", line 535, in invoke
        return callback(*args, **kwargs)
      File "/usr/local/lib/python2.7/site-packages/click/decorators.py", line 17, in new_func
        return f(get_current_context(), *args, **kwargs)
      File "/usr/local/lib/python2.7/site-packages/sentry/runner/decorators.py", line 30, in inner
        return ctx.invoke(f, *args, **kwargs)
      File "/usr/local/lib/python2.7/site-packages/click/core.py", line 535, in invoke
        return callback(*args, **kwargs)
      File "/usr/local/lib/python2.7/site-packages/sentry/runner/commands/backup.py", line 16, in import_
        obj.save()
      File "/usr/local/lib/python2.7/site-packages/django/core/serializers/base.py", line 205, in save
        models.Model.save_base(self.object, using=using, raw=True, **kwargs)
      File "/usr/local/lib/python2.7/site-packages/django/db/models/base.py", line 838, in save_base
        updated = self._save_table(raw, cls, force_insert, force_update, using, update_fields)
      File "/usr/local/lib/python2.7/site-packages/django/db/models/base.py", line 905, in _save_table
        forced_update)
      File "/usr/local/lib/python2.7/site-packages/django/db/models/base.py", line 955, in _do_update
        return filtered._update(values) > 0
      File "/usr/local/lib/python2.7/site-packages/django/db/models/query.py", line 667, in _update
        return query.get_compiler(self.db).execute_sql(CURSOR)
      File "/usr/local/lib/python2.7/site-packages/django/db/models/sql/compiler.py", line 1204, in execute_sql
        cursor = super(SQLUpdateCompiler, self).execute_sql(result_type)
      File "/usr/local/lib/python2.7/site-packages/django/db/models/sql/compiler.py", line 899, in execute_sql
        raise original_exception
    django.db.utils.IntegrityError: UniqueViolation('duplicate key value violates unique constraint "django_content_type_app_label_model_76bd3d3b_uniq"\nDETAIL: Key (app_label, model)=(sentry, groupresolution) already exists.\n',)
    SQL: UPDATE "django_content_type" SET "app_label" = %s, "model" = %s WHERE "django_content_type"."id" = %s

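My first idea was to delete the offending entries from the JSON. For (sentry, groupresolution), for example, searching for groupresolution finds the corresponding object. But after I had deleted several dozen entries the import still failed, with no end in sight...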

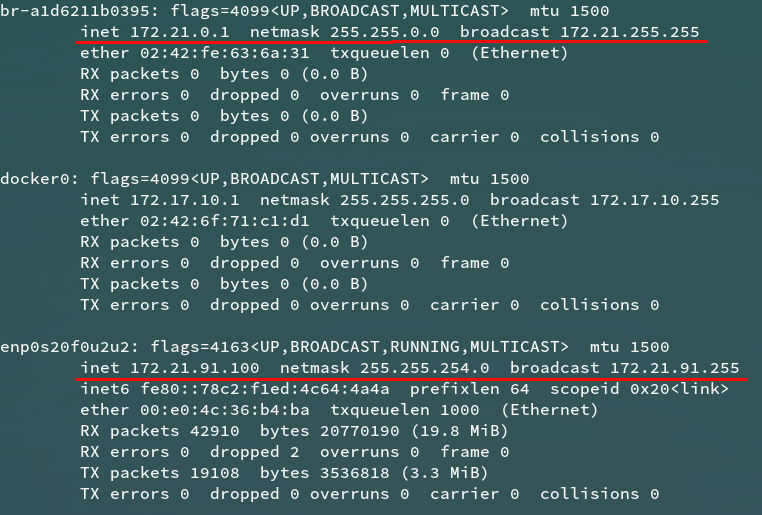

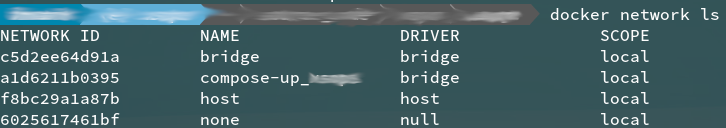
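
Before deleting entries one at a time, it can help to see how many there are. This is a rough sketch. It assumes the export is a standard Django JSON fixture: a list of objects with `model`, `pk` and `fields` keys. It also assumes the ContentType rows carry `app_label` and `model` fields, as the failing `UPDATE` above suggests. `summarize` and the other helper names are hypothetical. Any ContentType row whose `(app_label, model)` pair already exists in the new database under a different id can trigger the same `IntegrityError`:

```python
import json
from collections import Counter


def count_models(objects):
    """Count serialized objects per Django model label, e.g. "sentry.project"."""
    return Counter(obj["model"] for obj in objects)


def content_type_pairs(objects):
    """List the (app_label, model) pairs of the ContentType rows in the export."""
    return [
        (obj["fields"]["app_label"], obj["fields"]["model"])
        for obj in objects
        if obj["model"] == "contenttypes.contenttype"
    ]


def summarize(path):
    """Hypothetical helper: print the most common models and the ContentType count."""
    with open(path, encoding="utf-8") as f:
        objects = json.load(f)
    for label, n in count_models(objects).most_common(10):
        print(f"{n:6d}  {label}")
    print(len(content_type_pairs(objects)), "ContentType rows")
```

Running `summarize("sentry_export.json")` on the cleaned file gives a rough idea of how many candidates there are.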

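So I tried a different approach: clear out the new Sentry instance's database instead. First find the ID of the PostgreSQL container, enter it with `docker exec -it <container_id> bash`, open a database shell with `psql -U postgres`, and then run `TRUNCATE TABLE django_content_type`.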

Another error:

    postgres=# TRUNCATE TABLE django_content_type;
    ERROR: cannot truncate a table referenced in a foreign key constraint
    DETAIL: Table "django_admin_log" references "django_content_type".
    HINT: Truncate table "django_admin_log" at the same time, or use TRUNCATE ... CASCADE.

A foreign key constraint... Nothing else for it, then: straight to `TRUNCATE TABLE django_content_type CASCADE;`

    NOTICE: truncate cascades to table "django_admin_log"
    NOTICE: truncate cascades to table "auth_permission"
    NOTICE: truncate cascades to table "auth_group_permissions"
    TRUNCATE TABLE
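
The third NOTICE can look surprising, since `auth_group_permissions` does not reference `django_content_type` directly. PostgreSQL's `CASCADE` also empties every table that references a table already pulled in by the cascade, so it follows foreign keys transitively. Below is a sketch of that rule in Python. The edge list is an assumption based on Django's standard `contrib.auth`/`contrib.admin` tables, not a dump of Sentry's actual schema. `cascade_targets` is a hypothetical name:

```python
from collections import deque

# Assumed foreign keys as (referencing table, referenced table) pairs,
# based on Django's standard tables; a real schema has many more.
FOREIGN_KEYS = [
    ("django_admin_log", "django_content_type"),
    ("auth_permission", "django_content_type"),
    ("auth_group_permissions", "auth_permission"),
]


def cascade_targets(table, foreign_keys):
    """Tables that TRUNCATE <table> CASCADE would also empty: every table that
    references it directly or through a chain of foreign keys (breadth-first)."""
    referenced_by = {}
    for child, parent in foreign_keys:
        referenced_by.setdefault(parent, []).append(child)
    seen, queue = set(), deque([table])
    while queue:
        for child in referenced_by.get(queue.popleft(), []):
            if child not in seen:
                seen.add(child)
                queue.append(child)
    return seen


print(sorted(cascade_targets("django_content_type", FOREIGN_KEYS)))
# ['auth_group_permissions', 'auth_permission', 'django_admin_log']
```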

I ran the import again, and this time it succeeded.